Hardware Bottlenecks and Neural Networks

Training deep neural networks takes a long time. Since data science is largely a game of hyperparameter tuning, making your networks train as fast as possible lets you run more experiments and iterate faster. Once your model and data pipeline are ready, it is important to make sure your CPU and storage don’t bottleneck the training process.

The data pipeline

A typical deep learning data pipeline [1] looks something like this:

1. Read data from the disk.

2. Apply pre-processing to it.

3. Copy the data to the GPU.

4. Perform the forward and backward passes of backpropagation.

5. Copy the results back to CPU memory.

These steps are repeated until the network converges. Hardware bottlenecks are most likely during steps 1 and 2.

The tools of the trade

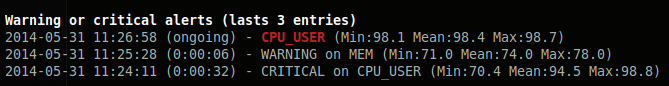

My favorite tool for monitoring a Linux system is glances. Its best feature is events, which tell you when any single resource is being over-utilized. Keeping glances running in the background while training is a good sanity check that things are running smoothly.

The other tool you should familiarize yourself with is nvidia-smi, which reports data about all the GPUs connected to your system. It has a lot of configuration options; the most useful is probably the dmon subcommand, which prints per-tick statistics for each GPU.

High CPU Usage Event in glances
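If you want to log dmon output or check it from a script instead of by eye, one option is to capture the text and parse it by its header row. This is a minimal sketch assuming `nvidia-smi dmon -s u -c N` (utilization metrics only, N samples) and that the first `#` line holds column names such as `sm`; the exact columns vary between driver versions, so the parser looks them up by name. The function names and the sample text are hypothetical.

```python
import subprocess
from typing import Dict, List


def run_dmon(samples: int = 10) -> str:
    """Capture `samples` ticks of utilization stats (-s u) from nvidia-smi dmon; needs an NVIDIA driver."""
    result = subprocess.run(
        ["nvidia-smi", "dmon", "-s", "u", "-c", str(samples)],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_dmon(text: str) -> List[Dict[str, str]]:
    """Turn dmon output into one dict per data row, keyed by the column names in the header."""
    columns: List[str] = []
    rows: List[Dict[str, str]] = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            # The first '#' line names the columns; later ones (units, repeated headers) are skipped.
            if not columns:
                columns = line.lstrip("#").split()
            continue
        if columns:
            rows.append(dict(zip(columns, line.split())))
    return rows


def sm_utilization(rows: List[Dict[str, str]]) -> List[int]:
    """Extract the 'sm' (compute) utilization percentage, skipping '-' placeholders."""
    return [int(r["sm"]) for r in rows if r.get("sm", "-").isdigit()]


# Illustrative shape of the output only, not a real capture.
sample = """\
# gpu    sm   mem   enc   dec
# Idx     %     %     %     %
    0     0     0     0     0
    0    97    41     0     0
"""
print(sm_utilization(parse_dmon(sample)))  # [0, 97]
```

On a machine with a GPU you would pass `run_dmon()` to `parse_dmon` instead of the sample string; on multi-GPU systems each row's `gpu` field tells you which device it belongs to.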

Finding bottlenecks

If your GPU spends many cycles doing nothing, or if you notice a significant delay between two consecutive batches being loaded onto the GPU, this could indicate a bottleneck. One way of finding bottlenecks is to periodically run glances in the background and make sure the training process is not raising any HIGH CPU USER or HIGH CPU IO WAITING events.
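To put a number on the delay between consecutive batches, one option is to time how long the training loop blocks waiting for each batch versus how long the step itself takes. The sketch below is framework-agnostic; `timed_batches` and `fake_loader` are hypothetical helpers, and `time.sleep` stands in for real data loading and a real training step.

```python
import time
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def timed_batches(batches: Iterable[T], wait_times: List[float]) -> Iterator[T]:
    """Yield batches unchanged, recording how long the consumer waited for each one."""
    it = iter(batches)
    while True:
        start = time.perf_counter()
        try:
            batch = next(it)
        except StopIteration:
            return
        wait_times.append(time.perf_counter() - start)
        yield batch


def fake_loader(n: int, delay: float) -> Iterator[int]:
    """Stand-in for disk reads plus augmentation."""
    for i in range(n):
        time.sleep(delay)
        yield i


waits: List[float] = []
steps: List[float] = []
for batch in timed_batches(fake_loader(5, 0.03), waits):
    t0 = time.perf_counter()
    time.sleep(0.005)  # stand-in for the forward and backward pass
    steps.append(time.perf_counter() - t0)

share = sum(waits) / (sum(waits) + sum(steps))
print(f"{share:.0%} of loop time spent waiting for data")
```

A share well above zero means the GPU sits idle while the CPU or disk catches up. With a real GPU framework, kernels run asynchronously, so you would need to synchronize (for example with `torch.cuda.synchronize()` in PyTorch) before reading the step timer.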

Another check is to run nvidia-smi dmon and make sure it does not show many consecutive ticks where GPU utilization is completely zero.
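Given a list of per-tick utilization numbers (for example the `sm` column from dmon), this check can be automated. A minimal sketch; the `min_ticks` threshold of 3 is an arbitrary assumption you would tune to your sampling interval, and the readings below are made up for illustration.

```python
from typing import List, Sequence, Tuple


def idle_streaks(utilization: Sequence[int], min_ticks: int = 3) -> List[Tuple[int, int]]:
    """Return (start_tick, length) for every run of at least `min_ticks` consecutive 0% samples."""
    streaks: List[Tuple[int, int]] = []
    run_start = None
    for i, u in enumerate(utilization):
        if u == 0:
            if run_start is None:
                run_start = i  # a new idle run begins here
        elif run_start is not None:
            if i - run_start >= min_ticks:
                streaks.append((run_start, i - run_start))
            run_start = None
    # A run that lasts until the final sample is never closed inside the loop.
    if run_start is not None and len(utilization) - run_start >= min_ticks:
        streaks.append((run_start, len(utilization) - run_start))
    return streaks


# Hypothetical per-tick 'sm' utilization readings, not real measurements.
samples = [95, 0, 0, 0, 0, 97, 0, 96, 0, 0, 0]
print(idle_streaks(samples))  # [(1, 4), (8, 3)]
```

The single zero at tick 6 is ignored: short dips between batches are normal, while long idle runs point at the data pipeline.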

Solving bottlenecks

CPU bottlenecks

CPU bottlenecks are usually caused by performing too many computations on the fly before the data is copied to the GPU’s VRAM. If you are performing augmentations on your image data (for example random crops and flips), this work may delay the copy to the GPU. Fixing this may require using simpler augmentations or, if that doesn’t help, in the worst case performing all the augmentations and dumping the results to disk before you start training.

Preprocessing data ahead of time usually cuts the CPU work done during training dramatically. If you want to fine-tune multiple CNNs on thousands of videos, dumping the raw frames to disk beforehand is definitely a worthwhile one-time investment.
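A minimal sketch of the "augment once, dump to disk" approach with NumPy, assuming images are already decoded into (H, W, C) arrays at least as large as the crop. The number of copies per image, the crop size, and the function names are hypothetical, and `.npy` is used only to keep the example dependency-free; the storage formats discussed under storage bottlenecks may read faster.

```python
import tempfile
from pathlib import Path
from typing import List, Sequence

import numpy as np


def random_crop_flip(img: np.ndarray, crop: int, rng: np.random.Generator) -> np.ndarray:
    """Randomly crop an (H, W, C) image to (crop, crop, C), then flip it left-right with probability 0.5."""
    h, w = img.shape[:2]
    top = int(rng.integers(0, h - crop + 1))
    left = int(rng.integers(0, w - crop + 1))
    out = img[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        out = out[:, ::-1]
    return np.ascontiguousarray(out)


def dump_augmented(images: Sequence[np.ndarray], out_dir, copies: int = 4,
                   crop: int = 224, seed: int = 0) -> List[Path]:
    """Save `copies` augmented versions of each image as .npy files and return their paths."""
    rng = np.random.default_rng(seed)
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, img in enumerate(images):
        for k in range(copies):
            path = out / f"{i:06d}_{k}.npy"
            np.save(path, random_crop_flip(img, crop, rng))
            paths.append(path)
    return paths


with tempfile.TemporaryDirectory() as tmp:
    images = [np.zeros((256, 256, 3), np.uint8), np.ones((300, 240, 3), np.uint8)]
    written = dump_augmented(images, tmp, copies=3, crop=224)
    first_shape = np.load(written[0]).shape
print(len(written), first_shape)  # 6 (224, 224, 3)
```

The trade-off is variety: training sees a fixed set of `copies` augmented versions per image instead of a fresh random one every epoch, in exchange for doing no augmentation work while the GPU waits.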

Storage bottlenecks

Storage bottlenecks are caused by performing operations synchronously or by using a slow spinning disk. Most modern frameworks provide constructs (DataLoader in PyTorch and Dataset.prefetch in TensorFlow [2]) that prepare the next batch in the background while the current batch is training, which can improve performance significantly. Using multiple worker processes speeds this up even more, though eventually you will hit a point of diminishing returns.

While the training step is running for batch n, the pipeline is reading the data for batch n+1.
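The overlap in that caption can be sketched without any framework: a background thread fills a small bounded queue with upcoming batches while the training loop consumes the current one. This is a hand-rolled illustration under simplifying assumptions, not how PyTorch's `DataLoader` or TensorFlow's `Dataset.prefetch` are implemented; `prefetch`, `buffer_size`, `slow_batches`, and `run` are hypothetical names, and `time.sleep` stands in for disk reads and training steps.

```python
import queue
import threading
import time
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel: the source iterable is exhausted


class _Failure:
    """Carries an exception raised while loading so the training loop can re-raise it."""

    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(batches: Iterable[T], buffer_size: int = 2) -> Iterator[T]:
    """Load up to `buffer_size` batches ahead on a background thread."""
    q: queue.Queue = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        try:
            for batch in batches:
                q.put(batch)  # blocks while the buffer is full
        except BaseException as exc:  # forward loader errors instead of losing them
            q.put(_Failure(exc))
            return
        q.put(_DONE)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()
        if item is _DONE:
            return
        if isinstance(item, _Failure):
            raise item.exc
        yield item


def slow_batches(n: int, delay: float) -> Iterator[int]:
    """Stand-in for reading and decoding n batches from a slow disk."""
    for i in range(n):
        time.sleep(delay)
        yield i


def run(source: Iterable[int], step_time: float = 0.03) -> float:
    """Consume `source` with a fake training step and return the wall time."""
    start = time.perf_counter()
    for _ in source:
        time.sleep(step_time)  # stand-in for the forward and backward pass
    return time.perf_counter() - start


sequential = run(slow_batches(8, 0.03))
overlapped = run(prefetch(slow_batches(8, 0.03)))
print(f"sequential: {sequential:.2f}s, prefetched: {overlapped:.2f}s")
```

A thread is enough here because `time.sleep` and most file I/O release Python's GIL. CPU-heavy decoding or augmentation written in pure Python would need separate processes instead, which is what the `num_workers` argument of PyTorch's `DataLoader` provides.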

Copying data to an SSD also helps a lot if your data is scattered across many small files and requires special logic to read (like optical flow .flo files).

The format you store data in also affects reading speed; the fastest formats are those that store data uncompressed. This uses a lot of space, but speeds up data loading significantly when you have hundreds of gigabytes of data. The best formats for fast reading are WAV for audio and BMP or PNG (with compression level 0) for images.
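As an illustration of why uncompressed formats are cheap to read: the payload of a 16-bit PCM WAV file is raw samples, so reading it with Python's standard-library `wave` module is a byte copy followed by a NumPy reinterpretation, with no decoding step. The sketch assumes mono 16-bit audio, and `write_wav`/`read_wav` are hypothetical helper names. For images, Pillow can write PNGs without compression via `img.save("frame.png", compress_level=0)`.

```python
import os
import tempfile
import wave

import numpy as np


def write_wav(path: str, samples: np.ndarray, sample_rate: int = 16000) -> None:
    """Write mono 16-bit PCM samples to an uncompressed WAV file."""
    with wave.open(path, "wb") as f:
        f.setnchannels(1)
        f.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        f.setframerate(sample_rate)
        f.writeframes(samples.astype("<i2").tobytes())  # WAV stores little-endian samples


def read_wav(path: str):
    """Read a mono 16-bit WAV file: a raw byte copy reinterpreted as int16, with no decoding."""
    with wave.open(path, "rb") as f:
        if f.getnchannels() != 1 or f.getsampwidth() != 2:
            raise ValueError("this sketch only handles mono 16-bit PCM")
        raw = f.readframes(f.getnframes())
        return np.frombuffer(raw, dtype="<i2"), f.getframerate()


tone = (np.sin(np.linspace(0, 2 * np.pi * 440, 16000)) * 10000).astype(np.int16)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tone.wav")
    write_wav(path, tone)
    decoded, rate = read_wav(path)
print(rate, len(decoded))  # 16000 16000
```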

For generic NumPy arrays, although the npy and npz formats exist, I have found that reading them is usually slow. In such cases using a database may be necessary. The best one in practice is probably HDF5. There might be better options out there, but for all my projects I have always achieved 100% GPU utilization with HDF5, so I haven’t needed a more efficient one. HDF5 lets you store arbitrarily shaped NumPy arrays, and there are Python bindings for it (such as h5py) that make it convenient to use, although I have found the documentation somewhat lacking.
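A minimal sketch of storing arbitrarily shaped NumPy arrays in one HDF5 file with h5py, one dataset per sample keyed by its index, and reading them back by index. The layout (dataset names, the `count` attribute) and the `H5Arrays` class are assumptions for illustration, not a convention h5py imposes.

```python
import os
import tempfile
from typing import Sequence

import h5py
import numpy as np


def write_arrays(path: str, arrays: Sequence[np.ndarray]) -> None:
    """Store each array, whatever its shape or dtype, as its own dataset named by its index."""
    with h5py.File(path, "w") as f:
        for i, arr in enumerate(arrays):
            f.create_dataset(str(i), data=arr)
        f.attrs["count"] = len(arrays)


class H5Arrays:
    """Random-access reader that keeps the file open and loads one array per lookup."""

    def __init__(self, path: str) -> None:
        self.file = h5py.File(path, "r")
        self.count = int(self.file.attrs["count"])

    def __len__(self) -> int:
        return self.count

    def __getitem__(self, i: int) -> np.ndarray:
        if not 0 <= i < self.count:
            raise IndexError(i)
        return self.file[str(i)][()]  # [()] reads the whole dataset into memory

    def close(self) -> None:
        self.file.close()


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "samples.h5")
    write_arrays(path, [np.arange(3.0), np.ones((2, 4), np.float32), np.zeros((5, 5, 3), np.uint8)])
    store = H5Arrays(path)
    shapes = [store[i].shape for i in range(len(store))]
    second = store[1]
    store.close()
print(shapes)  # [(3,), (2, 4), (5, 5, 3)]
```

One dataset per sample keeps the example short; when all samples share a shape, a single stacked, chunked dataset is usually more efficient. If several worker processes read the file, the commonly given advice is to open it inside each worker rather than sharing a handle created before the workers start.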

GPU bottlenecks

Since the GPU is usually the most expensive component, the entire training process is largely a matter of maximizing GPU usage. The main GPU bottleneck here is when you cannot fit a batch large enough for SGD to make meaningful progress. Larger batches give less noisy gradient estimates, and in practice I have found them to generalize better. Sadly, the only ways to address this are to buy more GPUs or to reduce your input dimensions, which may not always be possible.
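A back-of-the-envelope way to see why reducing input dimensions helps: for a fully convolutional network, activation memory per sample scales with the input's height times width, so halving both cuts it by roughly 4x. The figures below (an 11 GB card, 1 GB for weights and optimizer state, 400 MB of activations per sample) are hypothetical, and real usage adds framework overhead, so treat the result as an upper bound.

```python
def max_batch_size(vram_gb: float, fixed_gb: float, per_sample_mb: float) -> int:
    """Rough upper bound: memory left after weights and optimizer state, divided by per-sample activations."""
    free_mb = (vram_gb - fixed_gb) * 1024
    return int(free_mb // per_sample_mb)


# Hypothetical figures, not measurements.
base = max_batch_size(vram_gb=11, fixed_gb=1, per_sample_mb=400)
# Halving height and width shrinks every activation map, and so per-sample activation memory, about 4x.
halved = max_batch_size(vram_gb=11, fixed_gb=1, per_sample_mb=400 / 4)
print(base, halved)  # 25 102
```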

Conclusion

Putting a little thought into optimizing the data flow across your pipeline can help you gain significant performance improvements without spending too much effort.

In another post I’ll describe how I use HDF5 to improve data loading performance in typical image-based CNN architectures.

[1] Icons made by Freepik from www.flaticon.com.

[2] Image from the TensorFlow Dataset documentation.